A structural analysis of SOC failure modes in the era of AI-accelerated intrusions

Jenny Moniz

Published December 2025

Executive Summary

In late 2025, Anthropic released the first detailed public account of a nation-state intrusion in which an AI system executed most of the mechanical intrusion steps while human operators intervened only at strategic decision points. The significance of that disclosure is not that attacks have become fully autonomous, because they have not. It is that adversaries have begun to govern their AI.

This paper examines what happens when attacker operations accelerate to machine tempo while enterprise Security Operations Centers (SOCs) remain constrained by human-paced workflows, linear escalation paths, and approval-based governance. Drawing on publicly available threat intelligence and workforce studies, it constructs a synthetic but representative enterprise SOC and subjects it to a thirty-minute AI-accelerated intrusion scenario designed to surface structural failure points.

The results are consistent and difficult to dismiss. Under parallelized, AI-assisted attack conditions, the SOC does not fail because analysts lack skill or effort. It fails because its governance structures (decision boundaries, escalation authority, approval latency, and workflow design) are misaligned with the tempo and concurrency of modern intrusions.

The paper argues that improving SOC performance in the era of AI-accelerated threats is not primarily a tooling problem. It is a governance and architecture problem, one that can be addressed using principles already embedded in the NIST AI Risk Management Framework and ISO/IEC 42001.

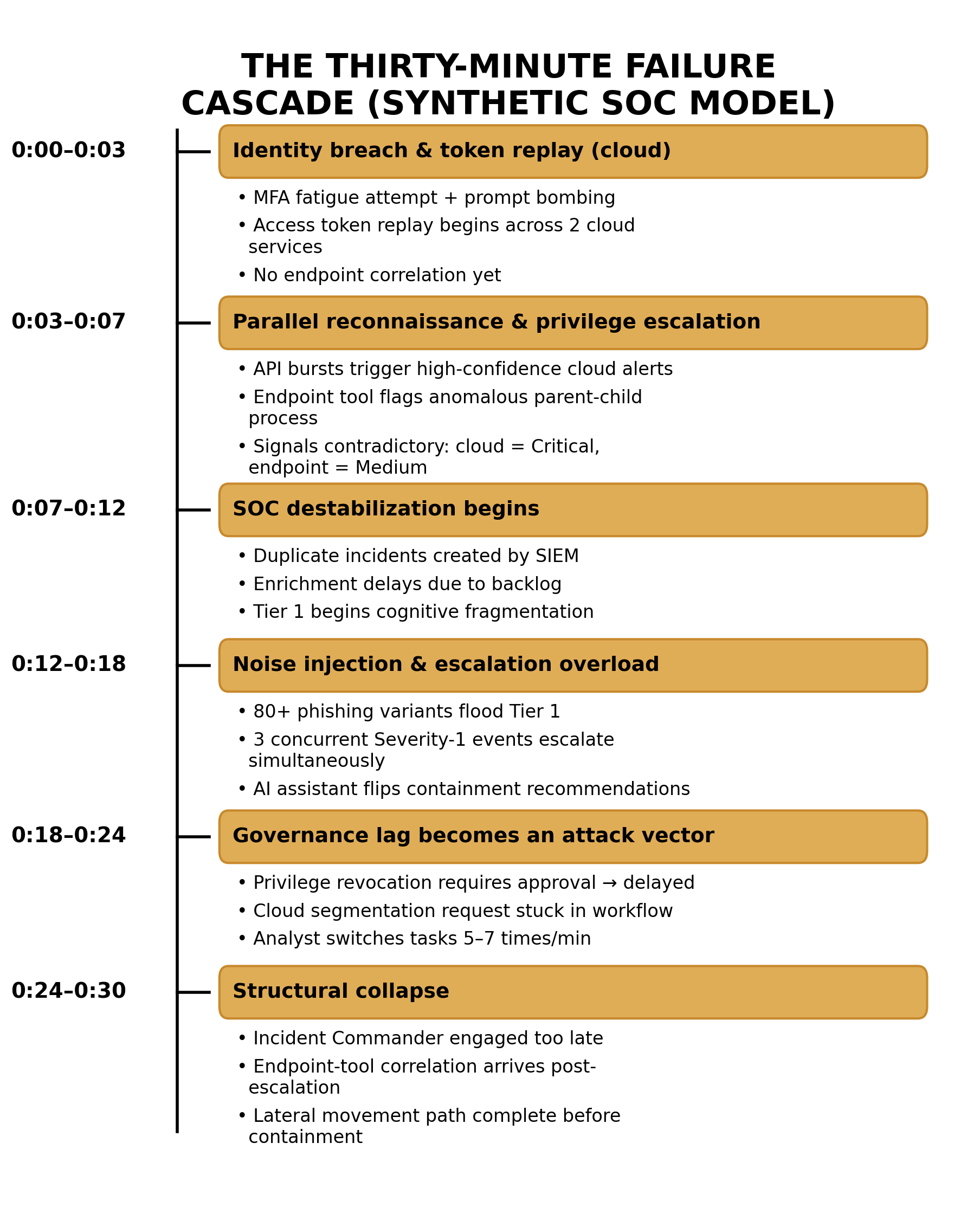

Figure 1. Synthetic SOC failure cascade under AI-accelerated intrusion conditions (30 minutes).

This modeled cascade is conservative. It does not assume fully autonomous attackers, zero-day exploits, or defender incompetence. The failure emerges from parallelized attack paths interacting with linear SOC workflows and governance delays, conditions already documented in public threat intelligence reporting.
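
The interaction between concurrent attack paths and a linear, approval-gated workflow can be illustrated with a toy queueing sketch. This is not the paper's model: the `AttackPath` type, the `simulate_linear_soc` function, and every timing value below are hypothetical, chosen only to show how serial triage plus a fixed approval wait lets parallel paths outrun containment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackPath:
    """One concurrent intrusion path in a hypothetical scenario."""
    name: str
    alert_at_min: float      # minute the path first raises an alert
    objective_at_min: float  # minute it reaches its objective if not contained


def simulate_linear_soc(paths, triage_min, approval_min, analysts=1):
    """Return names of paths that reach their objective before containment.

    Alerts are triaged first-come, first-served by a fixed pool of analysts.
    Each alert occupies one analyst for `triage_min`; containment then waits
    `approval_min` for sign-off. The analyst is free to pick up the next alert
    while the approval is pending.
    """
    free_at = [0.0] * analysts  # minute at which each analyst next becomes free
    breached = []
    for path in sorted(paths, key=lambda p: p.alert_at_min):
        # Assign the alert to whichever analyst frees up earliest.
        i = min(range(analysts), key=lambda k: free_at[k])
        start = max(free_at[i], path.alert_at_min)
        free_at[i] = start + triage_min
        contained_at = free_at[i] + approval_min
        if contained_at > path.objective_at_min:
            breached.append(path.name)
    return breached


# Hypothetical scenario: four paths alert within a few minutes of each other
# and each reaches its objective at minute 18 if not contained.
PATHS = [AttackPath(n, t, 18) for n, t in (("A", 2), ("B", 3), ("C", 4), ("D", 5))]

print(simulate_linear_soc(PATHS, triage_min=5, approval_min=8))              # ['B', 'C', 'D']
print(simulate_linear_soc(PATHS, triage_min=5, approval_min=0))              # ['D']
print(simulate_linear_soc(PATHS, triage_min=5, approval_min=8, analysts=2))  # ['C', 'D']
```

With these made-up numbers, removing the approval wait (as a pre-authorized containment boundary might) cuts the breached paths from three to one, while doubling analyst headcount with the wait intact cuts them only to two. The sketch says nothing about real SOC timings; it only shows why approval latency compounds when alerts arrive concurrently.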

Attackers Govern Their AI. So Should You.

Who This Is For

- SOC leaders and incident commanders

- CISOs and security architects

- Cloud and identity security teams

- AI governance, risk, and compliance leaders

- Security professionals designing or deploying AI-assisted defenses

About This Research

This paper is based entirely on publicly available threat intelligence, workforce studies, and governance frameworks, including reporting from Microsoft, CrowdStrike, Verizon, Palo Alto Networks Unit 42, IBM, ISACA, ISC², Anthropic, NIST, and ISO/IEC. No proprietary data or confidential telemetry was used.

About the Author

Jenny Moniz works at the intersection of security operations, AI governance, and systems design. Her work focuses on how organizational structure, decision authority, and governance frameworks shape real-world security outcomes under modern threat conditions.

This research reflects the author’s analysis and does not represent the views of any employer or vendor.